# Evaluating the Intelligence of GPT-4: A Comprehensive Analysis


Chapter 1: Introduction

The excitement surrounding AI technology is at an all-time high. Last month, numerous new AI models and systems were unveiled, with GPT-4 being the most prominent. Amidst this buzz, a critical question arises: Are these large language models (LLMs) genuinely intelligent? In this article, we will explore the definition of intelligence and evaluate whether GPT-4 meets this standard.

Disclaimer: This discussion draws on the recent Microsoft Research paper “Sparks of Artificial General Intelligence: Early experiments with GPT-4,” which is freely available on arXiv.

Chapter 2: Defining Intelligence

Intelligence is a multifaceted concept that can be understood in various ways. It generally encompasses the abilities to learn, comprehend, reason, solve problems, and adapt to new circumstances. For our purposes, we will adopt the definition used in the Microsoft Research paper, which builds on a 1994 consensus definition from a group of psychologists: intelligence is a very general mental capability that includes the ability to:

- Reason

- Plan

- Solve problems

- Think abstractly

- Understand complex ideas

- Learn swiftly and from experience

These attributes can be assessed through interactions with the model across various creative tasks. The researchers focused on several domains, including:

- Vision

- Theory of Mind

- Mathematics

- Coding

- Affordances

- Privacy and harm detection

In this article, we will highlight the most compelling findings from these experiments to determine if GPT-4 can indeed be classified as intelligent.

Feeling intrigued? Let’s dive in!

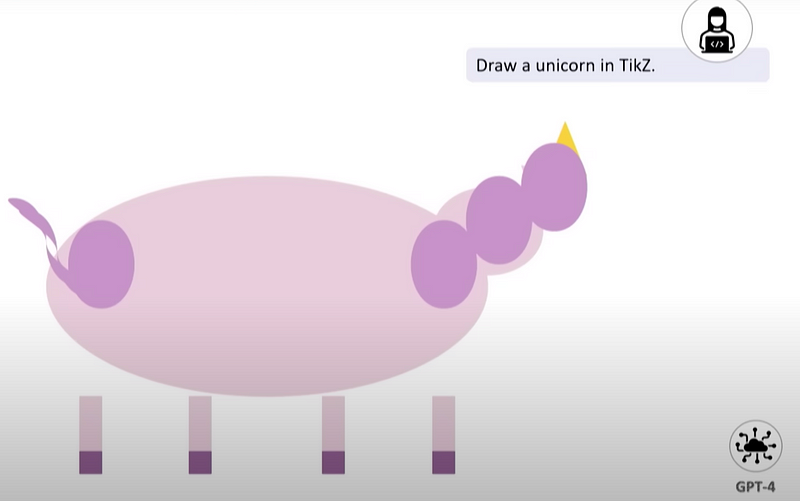

Chapter 3: Vision - A Creative Benchmark

A unique and amusing example presented in the study involves a unicorn. The researchers asked GPT-4 to draw a unicorn using TikZ, a LaTeX package for creating graphics. Many people would find this task challenging, since TikZ is not an intuitive tool for such illustrations. The rationale behind this unusual request is that TikZ unicorns are rarely found online, so the task tests whether GPT-4 can grasp the concept of a unicorn and encode it in LaTeX code rather than reproduce a memorized drawing. The results were impressive:

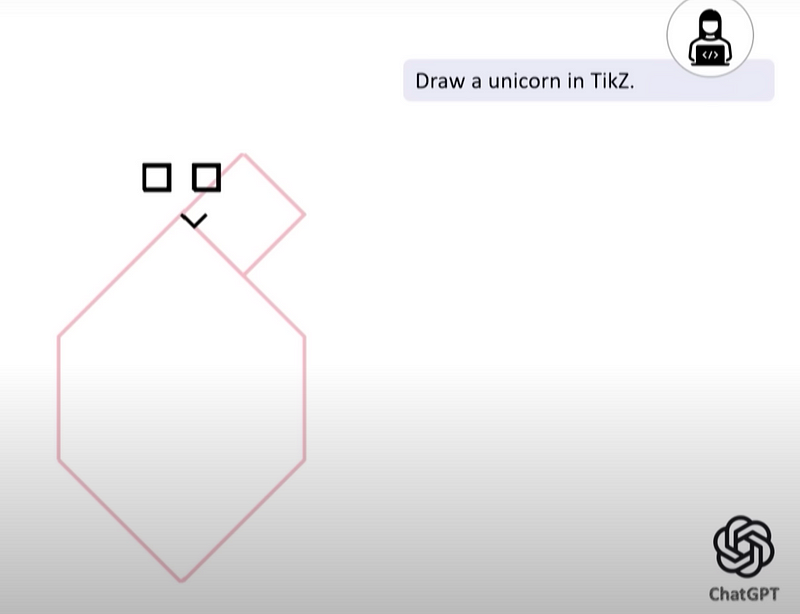

In contrast, here's the unicorn illustration created by ChatGPT:

The advancement from ChatGPT to GPT-4 is evident! Still, the unicorn itself leaves room for improvement. Let’s explore how Stable Diffusion can improve the visual output.

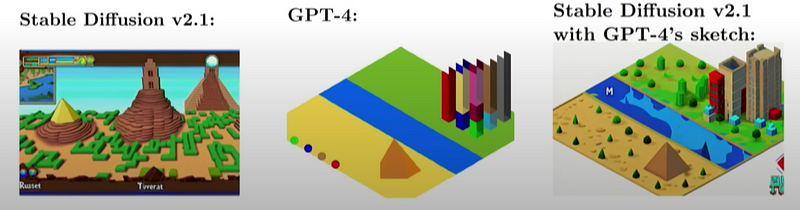
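To make concrete what "encoding the concept of a unicorn" in TikZ involves, here is a minimal, hypothetical Python sketch that assembles a crude unicorn from primitive shapes (an ellipse for the body, a circle for the head, lines for the legs, a triangle for the horn) and emits the TikZ source. The function name, coordinates, and shape choices are illustrative assumptions, not the prompt or the output from the paper; the point is that the model has to decide on the parts and their spatial relations purely in code, without ever seeing an image.

```python
def unicorn_tikz() -> str:
    """Return TikZ source for a crude unicorn built from primitive shapes (hypothetical example)."""
    # Each entry is one TikZ drawing command; coordinates use TikZ's default cm units.
    commands = [
        r"\draw[fill=white] (0,0) ellipse (1.5 and 0.8);",  # body
        r"\draw[fill=white] (1.8,1.0) circle (0.5);",       # head
        r"\draw (1.3,0.4) -- (1.6,0.8);",                   # neck
        r"\draw[fill=yellow] (2.0,1.4) -- (2.4,2.3) -- (2.2,1.35) -- cycle;",  # horn
    ]
    # Four legs hanging straight down from the underside of the body.
    for x in (-1.0, -0.5, 0.5, 1.0):
        commands.append(rf"\draw ({x},-0.6) -- ({x},-1.6);")
    body = "\n".join("  " + c for c in commands)
    return "\\begin{tikzpicture}\n" + body + "\n\\end{tikzpicture}"


print(unicorn_tikz())
```

Pasting the printed output into a LaTeX document that loads `\usepackage{tikz}` renders the figure. Even this toy version shows why the task is a meaningful test: every body part must be placed relative to the others using nothing but coordinates.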

Stable Diffusion: Enhancing Visual Quality

The researchers prompted GPT-4 to refine the TikZ-generated unicorn using the Stable Diffusion model, leading to a more visually appealing result:

The collaboration between GPT-4 and Stable Diffusion shows how GPT-4’s understanding of a scene helps when an image must meet specific criteria. For example, consider a prompt describing a screenshot of a 3D city-building game with the following features:

- A river flowing from left to right

- A desert with a pyramid located below the river

- A city featuring many skyscrapers above the river

- Four buttons at the bottom colored green, blue, brown, and red

Comparing the images produced by Stable Diffusion alone, by GPT-4 alone, and by Stable Diffusion working from GPT-4's initial sketch shows the distinct capabilities of each:

These examples illustrate that GPT-4 can think abstractly and comprehend complex ideas, two essential components of intelligence.

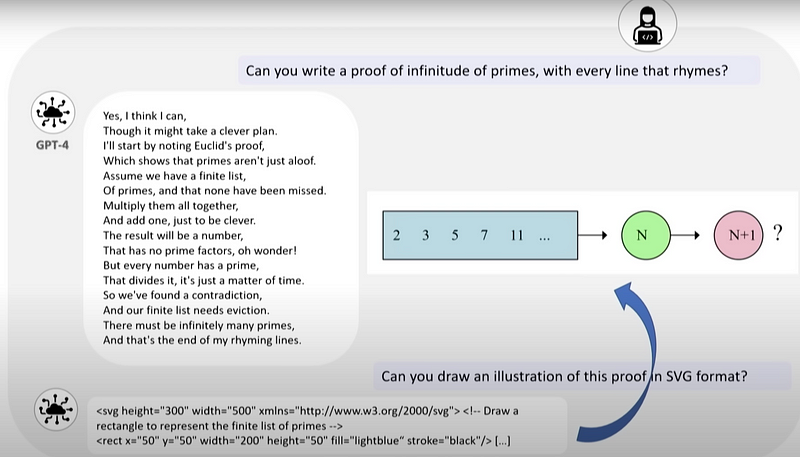
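One way to picture "GPT-4's initial sketch" is as a plain layout that pins down where each element goes, which an image model can then render in detail. The sketch below is a hypothetical illustration that assumes the layout is expressed as SVG (the article does not say which format the researchers used). It translates the four requirements of the city-building prompt into shapes; the function name, sizes, and coordinates are arbitrary choices.

```python
import xml.etree.ElementTree as ET


def city_game_sketch(width: int = 400, height: int = 300) -> str:
    """Return a rough SVG layout of the city-building game scene (hypothetical example)."""
    svg = ET.Element("svg", xmlns="http://www.w3.org/2000/svg",
                     width=str(width), height=str(height))
    river_y, river_h = 120, 30
    # City with skyscrapers above the river: tall gray rectangles whose bases touch the river.
    for i, h in enumerate((90, 60, 100, 75, 85)):
        ET.SubElement(svg, "rect", x=str(40 + i * 60), y=str(river_y - h),
                      width="40", height=str(h), fill="gray")
    # River flowing from left to right across the full width of the scene.
    ET.SubElement(svg, "rect", x="0", y=str(river_y), width=str(width),
                  height=str(river_h), fill="skyblue")
    # Desert below the river, with a pyramid inside it.
    desert_y = river_y + river_h
    ET.SubElement(svg, "rect", x="0", y=str(desert_y), width=str(width),
                  height="90", fill="khaki")
    ET.SubElement(svg, "polygon", points="170,230 200,170 230,230", fill="goldenrod")
    # Four buttons along the bottom edge, in the required left-to-right color order.
    for i, color in enumerate(("green", "blue", "brown", "red")):
        ET.SubElement(svg, "rect", x=str(60 + i * 80), y=str(height - 40),
                      width="60", height="25", fill=color)
    return ET.tostring(svg, encoding="unicode")
```

A layout like this settles the spatial relations ("above the river", "below the river", "at the bottom") that a text-to-image model working from the prompt alone often gets wrong, leaving the image model to handle texture and detail.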

Chapter 4: Mathematics - Rhymes and Reasoning

Another fascinating experiment involved asking GPT-4 to provide a proof of the infinitude of primes in a rhyming format while visually presenting the proof in SVG:

GPT-4 excelled at both tasks, showcasing its ability to reason mathematically.
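For reference, the standard argument behind this result is Euclid's proof, sketched below. This is the textbook proof, not GPT-4's rhyming output, which the article does not reproduce:

```latex
\begin{proof}
Suppose there are only finitely many primes $p_1, p_2, \dots, p_n$, and let
\[
  N = p_1 p_2 \cdots p_n + 1 .
\]
Since $N > 1$, it has at least one prime factor $q$. Dividing $N$ by any $p_i$
leaves remainder $1$, so $q \neq p_i$ for every $i$. Hence $q$ is a prime that
is missing from the list, a contradiction. Therefore there are infinitely many primes.
\end{proof}
```

Note that N itself need not be prime: 2 × 3 × 5 × 7 × 11 × 13 + 1 = 30031 = 59 × 509. The proof only needs some prime factor outside the original list, which is exactly the step a rhyming version has to preserve.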

Chapter 5: Coding - The Real Power of Understanding

This section highlights GPT-4’s impressive coding capabilities. As with image generation, GPT-4’s strength lies in how well it understands the requirements it is given.

GPT-4 as a Coding Companion

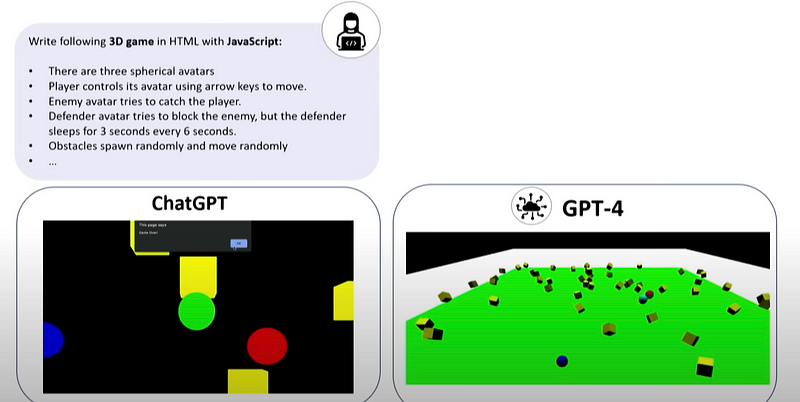

Researchers compared the coding outputs of ChatGPT and GPT-4 in generating a 3D game using HTML and JavaScript. The differences in their outputs were striking:

The gap is immediately clear. While ChatGPT struggled to create a functional 3D game that followed the guidelines, GPT-4's game met all of the required criteria.

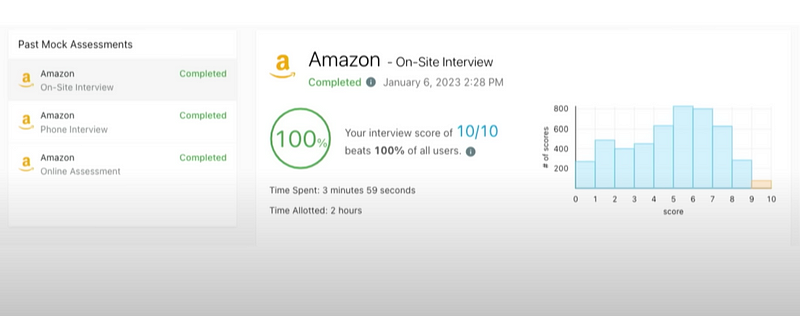

Success in Coding Interviews

GPT-4 also passed coding assessments for both Google and Amazon. A user relying on the model completed the test in just four minutes, out of the two hours allowed:

This level of performance appears almost superhuman!

Chapter 6: Conclusion

The overall findings suggest that GPT-4 meets four out of the six components of the consensus definition of intelligence outlined earlier:

- Reasoning

- Problem-solving

- Abstract thinking

- Understanding complex ideas

I believe the evidence provided supports these claims.

What about the other two characteristics, learning from experience and planning? While GPT-4 does show some ability to learn within a single session, it does not retain that information once the session ends. GPT-4 also struggles with tasks that require multi-step planning, because it generates its output one token at a time, choosing each next token without an explicit plan for the whole answer.
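To illustrate what "generating the next token" means and why it can work against planning, here is a minimal sketch of greedy autoregressive decoding. A hypothetical hand-written bigram table stands in for the model; it is not how GPT-4 works internally, since real models condition on the entire context and often sample rather than always taking the most likely token. At each step the decoder commits to the single most likely next token, with no lookahead. The function also keeps no state between calls, so anything in one call's context is gone in the next unless the caller passes it in again, loosely mirroring the session behavior described above.

```python
# Hypothetical toy "model": probability of each next token given only the previous token.
BIGRAMS: dict[str, dict[str, float]] = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "end": 0.2},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.6, "</s>": 0.4},
    "dog": {"ran": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
    "end": {"</s>": 1.0},
}


def greedy_decode(context: list[str], max_tokens: int = 10) -> list[str]:
    """Extend `context` one token at a time, always taking the most likely next token."""
    tokens = list(context)  # copy the input: nothing persists between calls
    for _ in range(max_tokens):
        candidates = BIGRAMS.get(tokens[-1])
        if not candidates:
            break
        # Local, greedy choice: no lookahead at how the whole sequence will end.
        next_token = max(candidates, key=candidates.get)
        tokens.append(next_token)
        if next_token == "</s>":
            break
    return tokens


print(greedy_decode(["<s>"]))  # ['<s>', 'the', 'cat', 'sat', '</s>']
```

Greedy decoding here returns a sequence with probability 0.6 × 0.5 × 0.6 = 0.18, even though "<s> a dog ran </s>" has the higher probability 0.4 × 0.7 × 0.8 = 0.224. Committing to the locally best first token rules out the better overall answer, which is a small-scale version of the planning weakness described above.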

The debate on whether GPT-4 can be deemed genuinely intelligent continues. However, it is undoubtedly a valuable tool for everyday applications, especially when paired with other tools or plugins.

Enjoyed This Insight?

If you found this article engaging, consider exploring my other writings on ChatGPT!


To receive my latest articles directly in your inbox, subscribe! You can also connect with me on Twitter!